Lessons Learned Developing Text Ads Generation

Lessons I learned over more than three years of developing a text ads generation project with language models.

FlaxGPT

FlaxGPT is a simple Flax implementation of a GPT (decoder-only transformer) model. It supports loading LLaMA2 checkpoints and making predictions.

LLaMA Code Analysis

Learn how modern transformers work by analyzing the official implementation of LLaMA.

Stable Diffusion Deep Dive Series

A series of experiments in learning Stable Diffusion.

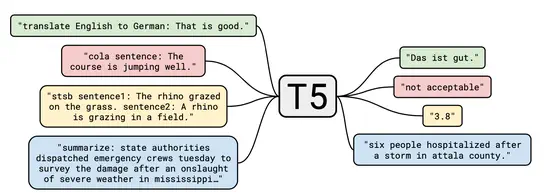

T5 Paper Notes

T5 is an encoder-decoder transformer that uses multi-task learning during training. Studying the T5 paper reveals many interesting details about pre-training transformer models.

NesGym

NesGym was a personal project that I undertook after being captivated by the groundbreaking achievements of AlphaGo. Reinforcement learning (RL) was drawing significant attention in the machine learning community at the time, and OpenAI had introduced a gym environment to facilitate RL experimentation. As an avid Nintendo enthusiast, I found it intriguing to create an environment for a Nintendo Entertainment System (NES) emulator as well.